AI Chatbots and Child Safety

Children chatting with AI without safeguards is now a critical issue. Who is setting the rules?

In 2025, AI chatbots and child safety are no longer a fringe issue. These tools are in children’s hands, bedrooms, schoolbags and browsers every single day.

While AI adoption is skyrocketing across industries, its quiet rise among young users has caught many parents, educators, and policymakers by surprise.

The research makes one thing clear:

❗Children are not just passive consumers of AI — they are active participants. And most of the tools they’re using were never designed with children in mind.

How many children are already using AI?

Recent research shows that AI chatbots are no longer niche. In Ireland, over 26% of 8–12-year-olds have already experimented with them, while in the UK, usage among teenagers has grown sharply in the past year.

What’s the main risk for children?

Children aren’t just testing the technology — they’re forming bonds with it. One in three say chatting with AI feels like “talking to a friend,” and for vulnerable groups, that number rises to one in two.

Why AI use without guardrails is dangerous

Unlike friends or teachers, chatbots don’t provide emotional safeguards. They can produce inaccurate answers, expose children to age-inappropriate content, and operate without parental visibility. In fact, 63% of children say their parents cannot see what they do online, which leaves many chatbot conversations completely hidden.

The bottom line

AI chatbots are shaping young people’s lives before parents, schools, or regulators have caught up. Without clear guardrails, risks like misinformation, emotional over-reliance, and privacy leaks will only deepen.

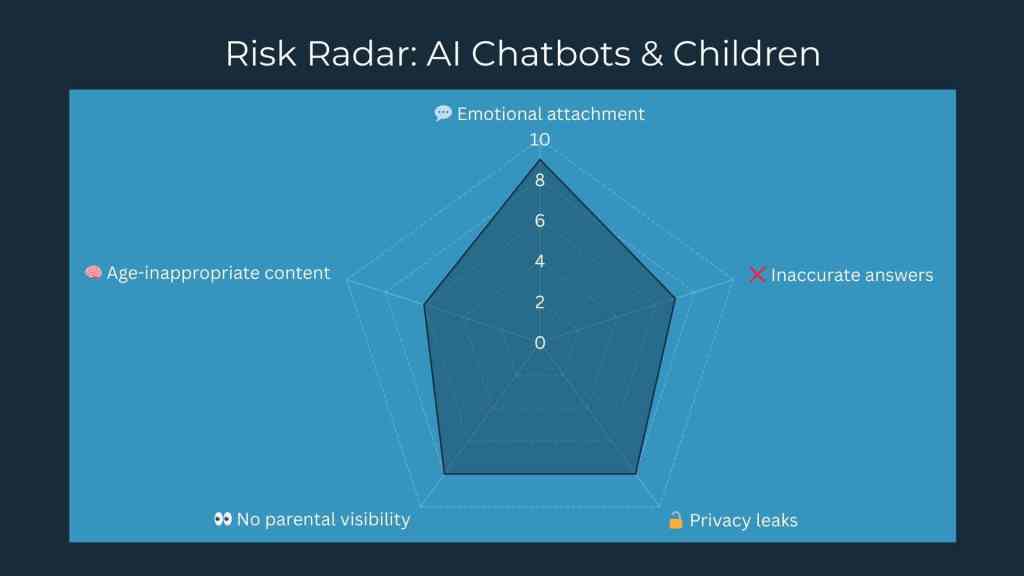

The research identifies five key risks that children face right now. These are shown in the columns below, with scoring to highlight their severity.

💬 67%

Children aged 9–17 in the UK regularly interact with AI chatbots

Many use them for support, conversation, or curiosity.

Source: Internet Matters 2025

🔐 63%

Say their parents cannot see what they’re doing online

This digital invisibility leaves children exposed.

Source: CyberSafeKids 2025

❤️ 35%

Say chatting with an AI feels like talking to a friend

Among vulnerable children, this jumps to 50%.

Source: Internet Matters 2025

What these statistics reveal about AI chatbots and child safety isn’t just a trend; it’s a tipping point.

AI is already woven into the everyday lives of children, from school projects to late-night curiosity. Yet most platforms lack even the most basic age-appropriate guardrails. They often collect data and simulate empathy without disclosing the risks.

The result? Children are forming relationships with tools they don’t fully understand, while the adults around them remain unaware or under-prepared.

At 360 Marketing, we believe the conversation can’t just focus on innovation. It must also include protection, transparency, and responsible strategy.

AI Chatbots and Child Safety: The Biggest Risks in 2025

Why AI Chatbots Aren’t Childproof, and What’s at Stake

The numbers speak volumes, but the most urgent risks lie in the real-world consequences of AI chatbot use among children.

At first glance, AI chatbots may seem helpful. They answer questions, offer companionship, and never judge. But dig deeper, and the cracks show: no emotional context, no ethical boundaries, and no protection protocols.

Key Risks Facing Young Users Today

The biggest risks around AI chatbots and child safety go beyond simple statistics. They affect how children learn, connect, and stay safe online.

Emotional Over-Attachment

Many children describe AI chatbots as “someone to talk to” — with 35% saying it feels like chatting to a friend. Among vulnerable children, this rises to 50%.

While empathy simulation can feel comforting, AI cannot truly understand, comfort, or intervene in times of emotional distress. The risk? Children may develop unhealthy reliance on digital interactions instead of real human connection.

No Age Verification or Filtering

The majority of AI chatbots do not verify a user’s age or adapt their language based on developmental stage.

This means that an 11-year-old using a chatbot may encounter:

- Mature or adult concepts

- Misinformation passed off as fact

- Unfiltered conversations about topics beyond their readiness

There’s no built-in understanding of cognitive development, trauma sensitivity, or moral nuance.

Inaccurate and Misleading Responses

Chatbots don’t always get it right, and children often can’t tell verified facts from AI-generated claims.

From health advice to history, AI answers can be wrong, or biased by the data the model was trained on. Without fact-checking skills, children are vulnerable to accepting harmful or false information at face value.

Unseen Data Exposure

Children may unknowingly share personal information — like names, locations, or feelings — with tools that log, analyse, or retain data.

Many AI tools have unclear data policies and do not distinguish between a child and an adult user. This raises serious concerns about privacy, GDPR compliance, and digital consent.

No Visibility for Adults

63% of children say their parents can’t see what they’re doing online. And few AI tools offer conversation history or reporting tools for parents or educators.

This invisibility means harmful content, risky behaviour, or emotional distress may go unnoticed until it’s too late. Children are being left to navigate unmoderated spaces on their own, with no digital supervision.


Guardrails for AI Chatbots and Child Safety

“As digital strategists, we advocate for more than smart tools, we advocate for safe ones. And nowhere is this clearer than in the case of AI chatbots and child safety.”

— Nichola Donnelly, Founder, 360 Marketing

In a world where AI is embedded into homework help, voice assistants, and learning apps, it’s no longer enough to rely on product disclaimers. The responsibility must shift toward design principles like:

- Age-gated experiences

- Ethical UX design

- Built-in safeguards by default

Without these, we’re not just automating; we’re exposing children to risk.

“They’re not just talking to AI. They’re trusting it.”

Without guardrails, every interaction risks not just safety but trust itself.

A Wake-Up Call for the Digital Industry

AI is no longer just a back-end tool; it’s a frontline interaction channel, and increasingly one that children use. For marketers, digital strategists, and platform leaders, the issue of AI chatbots and child safety is now central to trust, compliance, and brand value.

Three Reasons Digital Leaders Cannot Ignore This:

- Trust Is the New Currency

If AI-powered products, platforms, or campaigns are seen as unsafe, trust erodes quickly with parents, regulators, investors, and the wider public. But brands that prioritise safety and transparency can build loyalty that lasts.

- Regulation Is Coming, Fast

The EU AI Act and similar frameworks make it clear: tools must be transparent, ethical, and age-appropriate for young users. Businesses that don’t prepare now will face legal, financial, and operational consequences later.

- Ethics Drive Brand Differentiation

In a saturated digital landscape, standing for safety, responsibility, and ethical innovation can become a brand’s strongest differentiator. Companies that adopt “guardrails-first” thinking won’t just protect users; they’ll lead the market conversation.

For marketers and digital leaders, this isn’t just about compliance; it’s about strategy. Those who act early will define the standards the rest of the industry follows. The same entity-based approach driving AI SEO transformation is also reshaping safety concerns.

What Parents, Educators and Platforms Can Do

From Awareness to Action: How We Can Keep Young People Safe

Awareness of the risks is the first step in addressing AI chatbots and child safety. But awareness without action changes nothing. Parents, educators, and platforms all have a role to play in creating digital guardrails that protect children while allowing innovation to thrive.

Here’s how each group can play their part:

For Parents & Guardians (3 key steps)

- Start conversations early → Explain what AI is, and encourage children to share their experiences.

- Use parental tools where possible → Dashboards, filters, or platforms with clear safety features.

- Model responsible use → Show children how you use AI thoughtfully, so they see it as a tool, not a substitute for relationships.

For Educators & Schools (3 key steps)

- Teach AI literacy → Equip students to question chatbot responses and check facts.

- Promote safe, age-appropriate tools → Integrate vetted AI resources into classwork.

- Partner with parents → Share guidelines so home and school reinforce each other.

For Policymakers & Platforms (3 key steps)

- Build age-gating into design → Verify age before access rather than relying on disclaimers.

- Prioritise transparency → Make it clear when a child is talking to a bot, not a person.

- Protect children’s data by default → No profiling, retention, or collection of under-18 interactions.

👪

Parents

At Home

Start the conversation – ask what they’re using AI for and why.

✅ Set boundaries – agree times, spaces, and topics.

🔐 Switch on safeguards – enable SafeSearch, filters, or family dashboards.

👀 Stay curious – review activity together and ask how it felt.

🏫

Educators

In Schools

📘 Teach AI literacy – compare chatbot vs textbook answers.

📝 Set a school policy – require disclosure of AI use in assignments.

👩‍🏫 Supervise use – keep chatbot tasks structured and time-limited.

📣 Engage parents – share guidance and invite feedback.

🏛️

Platforms & Policymakers

By Design

🧾 Age verification – restrict underage access.

🛡️ Moderation tools – block harmful or adult content.

💡 Limit “friendship” language – disclose that users are talking to a bot.

🔒 Privacy by default – no data retention or profiling of minors.
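
For platform teams, the safeguards listed above could translate into session defaults along these lines. This is a minimal Python sketch, not any real product's API: `SessionPolicy`, `policy_for`, the minimum age of 13, and the disclosure wording are all hypothetical, chosen only to illustrate the idea that a user whose age is unverified is treated as a child.

```python
from dataclasses import dataclass
from typing import Optional

ADULT_AGE = 18  # the article's "under-18" threshold for data protection

# Hypothetical wording; a real product would write its own disclosure.
BOT_DISCLOSURE = "Reminder: you are chatting with an AI, not a person."


@dataclass(frozen=True)
class SessionPolicy:
    """Illustrative safety settings applied to one chat session."""
    allow_access: bool      # age verification: may this user chat at all?
    retain_history: bool    # privacy by default: keep conversation logs?
    allow_profiling: bool   # privacy by default: build a user profile?
    content_filter: str     # moderation level: "strict" or "standard"
    disclosure: str         # shown to every user, child or adult


def policy_for(verified_age: Optional[int], min_age: int = 13) -> SessionPolicy:
    """Pick the most protective policy that fits what is known about the user.

    min_age=13 is an assumption for illustration, not a figure from the article.
    """
    # An unknown age is treated as a minor, so safeguards are on by default.
    is_minor = verified_age is None or verified_age < ADULT_AGE
    # Access requires a verified age at or above the minimum; a disclaimer alone is not enough.
    allow = verified_age is not None and verified_age >= min_age
    return SessionPolicy(
        allow_access=allow,
        retain_history=not is_minor,
        allow_profiling=not is_minor,
        content_filter="strict" if is_minor else "standard",
        disclosure=BOT_DISCLOSURE,
    )
```

The design choice worth noting is the direction of the default: protections are switched off only once an adult age has been verified, rather than switched on once a child has been detected.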


360 Marketing’s Position on AI, Children & Digital Responsibility

“Children don’t need perfect technology but they do need responsible technology. Guardrails are not obstacles to progress. They’re what will make AI a lasting, trusted part of our digital future.”

— Nichola Donnelly, Founder, 360 Marketing

At 360 Marketing, we believe the rise of AI chatbots among children is more than a tech trend; it’s a societal shift that demands leadership, transparency, and responsibility.

We guide organisations to balance growth with ethics by:

- Designing campaigns and tools that inspire confidence, not concern

- Embedding ethics into digital strategy

- Anticipating regulatory shifts

What We Stand For

- Ethical Innovation → Growth without compromising values

- Digital Maturity → Helping brands adapt responsibly to AI

- Transparency & Trust → Building long-term credibility

- Guardrails-First Thinking → Safety as the foundation, not the barrier

As digital strategists, we don’t just track trends — we translate complex research (CyberSafeKids, RTÉ, Internet Matters) into actionable guidance, helping organisations anticipate regulation, protect their reputation, and lead responsibly. Responsible innovation sits at the heart of our digital marketing strategy framework.

“Innovation without responsibility isn’t progress, it’s risk.

That’s why 360 Marketing is stepping forward: to guide organisations through the new realities of AI with strategies that balance growth, safety, and trust.”

— Nichola Donnelly, Founder, 360 Marketing

Our Values Framework

Innovation

Guardrails

Trust

Growth

Resources & Further Reading

Stay Informed. Stay Responsible.

360 Marketing is committed to curating the most credible and current research on AI, children, and digital responsibility. Explore these resources to deepen your understanding:

Key Reports & Coverage

- CyberSafeKids Report 2025 – A Life Behind the Screens

- Internet Matters 2025 – Me, Myself & AI

- RTÉ News Coverage – AI Chatbots & Regulation in Ireland

Recommended Reading from 360 Marketing

- AI Ethics in Marketing: Why Guardrails Build Trust

- Digital Maturity in 2025: Preparing for the AI Act

- How to Talk to Children About AI Tools